In a nutshell: Apple says that Private Cloud Compute is the most advanced security architecture ever deployed for cloud AI compute at scale. By inviting scrutiny from the security research community, Cupertino hopes to build trust in its system and improve its security measures.

Apple has announced a significant expansion of its bug bounty program to improve the security of its upcoming Private Cloud Compute service, designed as an extension of its on-device AI model, Apple Intelligence. This cloud-based service aims to handle more complex AI tasks while maintaining user privacy.

The expanded bounty program focuses on three main threat categories: accidental data disclosure, external compromise from user requests, and physical or internal access vulnerabilities. Specifically, the program targets remote code execution, data extraction, and network-based attacks. The maximum bounty of $1 million goes to researchers who identify exploits that allow malicious code to run remotely on Private Cloud Compute servers.

Researchers can also earn up to $250,000 for reporting vulnerabilities that enable the extraction of sensitive user information or submitted prompts. Exploits that access sensitive user data from a privileged network position could net researchers up to $150,000.
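For readers who want the headline figures at a glance, the tiers reported above can be sketched as a small lookup table. This is only an illustration of the numbers in this article: the category keys and function names are made up for the example and are not Apple's official labels.

```python
from typing import Optional

# Maximum rewards in USD, as reported in this article.
# The keys are illustrative names, not Apple's official category labels.
MAX_BOUNTY_USD = {
    "remote_code_execution": 1_000_000,
    "user_data_extraction": 250_000,
    "privileged_network_access": 150_000,
}


def max_bounty(category: str) -> Optional[int]:
    """Return the reported maximum reward, or None for an unlisted category."""
    return MAX_BOUNTY_USD.get(category)


def describe_bounty(category: str) -> str:
    """Format the reported ceiling for a category as a short phrase."""
    amount = max_bounty(category)
    if amount is None:
        # Unlisted categories have no published ceiling in this table.
        return "no published maximum"
    return f"up to ${amount:,}"


if __name__ == "__main__":
    for name in MAX_BOUNTY_USD:
        print(f"{name}: {describe_bounty(name)}")
```

A `None` result here means only that the category has no published ceiling in this table, not that a report would go unrewarded.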

Apple pointed out that the rewards for Private Cloud Compute vulnerabilities are comparable to those offered for iOS, given the critical nature of the service's security and privacy guarantees.

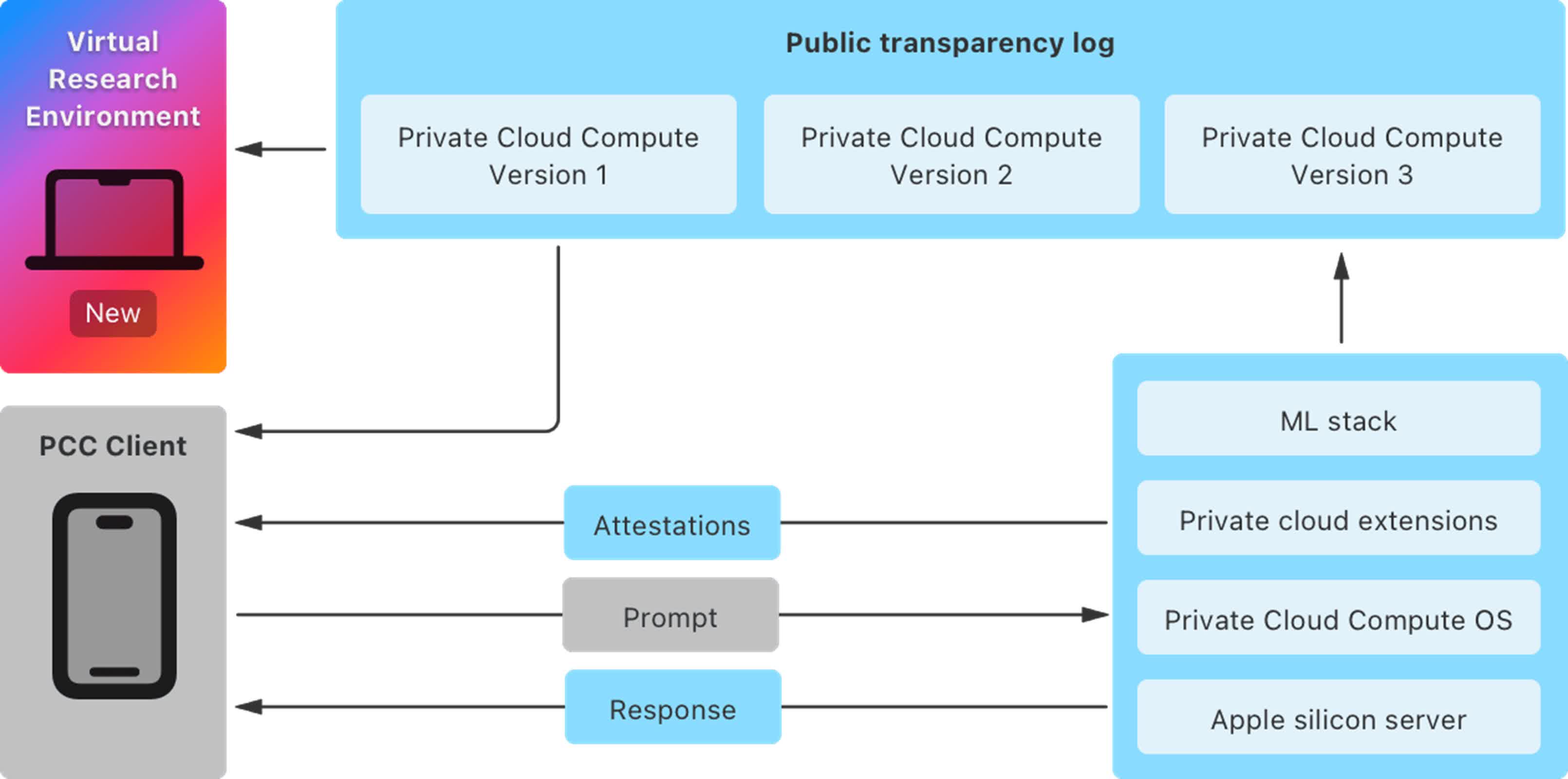

To support this initiative, Apple is providing researchers with extensive resources to inspect and verify the end-to-end security and privacy promises of Private Cloud Compute. These include a comprehensive security guide detailing the architecture and security measures, a Virtual Research Environment (VRE) that allows direct analysis of the system on Mac computers, and access to source code for key components under a limited-use license agreement.

Providing these tools is an unprecedented step for Cupertino, Apple said, aimed at building trust in the system. The company had already given third-party auditors and select security researchers early access to these resources. "Today we're making these resources publicly available to invite all security and privacy researchers – or anyone with interest and a technical curiosity – to learn more about PCC and perform their own independent verification of our claims," Apple said.

The VRE, which runs on Macs with Apple silicon and at least 16GB of unified memory, offers powerful tools for examining and verifying PCC software releases, booting releases in a virtualized environment, performing inference against demonstration models, and modifying and debugging PCC software for deeper investigation.
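The hardware requirements above can be checked with a short script before attempting to set up the VRE. The following is a minimal sketch, not an Apple-provided tool: the function names are hypothetical, it reads "16GB" as 16 GiB, and it relies on the standard macOS `sysctl -n hw.memsize` command to read installed memory. Note that a Python interpreter running under Rosetta may report `x86_64` even on Apple silicon.

```python
import platform
import subprocess

# Assumption: the article's "16GB" is interpreted as 16 GiB.
MIN_MEMORY_BYTES = 16 * 1024**3


def meets_vre_requirements(system: str, machine: str, memory_bytes: int) -> bool:
    """Check the requirements stated in the article: a Mac with Apple
    silicon and at least 16GB of unified memory."""
    is_mac = system == "Darwin"
    is_apple_silicon = machine == "arm64"
    return is_mac and is_apple_silicon and memory_bytes >= MIN_MEMORY_BYTES


def local_memory_bytes() -> int:
    """Read installed memory on macOS via `sysctl -n hw.memsize`."""
    result = subprocess.run(
        ["sysctl", "-n", "hw.memsize"],
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout.strip())


if __name__ == "__main__":
    system = platform.system()
    if system == "Darwin":
        ok = meets_vre_requirements(system, platform.machine(), local_memory_bytes())
    else:
        # sysctl hw.memsize is macOS-specific, so skip the query elsewhere.
        ok = False
    print("Meets stated VRE requirements" if ok else "Does not meet stated VRE requirements")
```

Keeping the check in a pure function that takes the system, architecture, and memory size as arguments makes it easy to verify without access to a Mac.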

Apple has made source code available for several components of Private Cloud Compute that cover various aspects of PCC's security and privacy implementation. These include the CloudAttestation project, Thimble project, splunkloggingd daemon, and srd_tools project.

Apple also said it will consider rewarding any security issue that has a significant impact on PCC, even if it falls outside the published categories. "We'll evaluate every report according to the quality of what's presented, the proof of what can be exploited, and the impact to users," it said.