Misconceptions in tech are endless, but myths about low-res CPU benchmarks top our list. Let's revisit this hot topic and test the new 9800X3D at 4K to clarify what really matters.

Is the Ryzen 9800X3D Truly Faster for Real-World 4K Gaming?

- Thread starter Steve

WhiteLeaff

Posts: 659 +1,284

Yeah, this argument is nullified by the fact that recent games are so heavy that you have to resort to upscaling and fake frame rates, so the CPU becomes the focus again. Games like simulators are also heavy on the CPU at any resolution.

Eldrach

Posts: 164 +167

For me it's about future-proofing. Sitting with a 240 Hz 1440p monitor, I am usually not CPU-limited, as most games run around 200 fps with my 4090 and 5900X. That will change when I upgrade my GPU to a 5090: I will hit the CPU ceiling fast, and my old AM4 system is limited by slower memory as well.

Buy a 7800X3D or 9800X3D and this won't be a worry for at least the next 4 years. To be fair, I also lower my settings in games like Overwatch to cap it at 240 fps. With a 5090 I probably won't have to, as long as the CPU can keep up, which is why I have a 9800X3D incoming.

DanteSmith

Posts: 90 +153

"Is the Ryzen 9800X3D Truly Faster for Real-World 4K Gaming?"

You need to change your title. You say 4k gaming but you enable DLSS so the game is being rendered at less than 1440p NOT 4k.

That means there aren't any 4k results in your review.
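The "less than 1440p" point can be checked with a little arithmetic. The sketch below assumes the commonly cited per-axis DLSS scale factors (roughly 0.667 for Quality, 0.58 for Balanced, 0.5 for Performance). Those factors are an assumption, not something stated in the article, and individual games can use different values.

```python
# Per-axis render scale for each DLSS mode. These are commonly cited
# defaults and an assumption here; individual games can override them.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
}


def internal_resolution(out_w, out_h, mode):
    """Return the approximate internal render resolution for a DLSS mode."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


for mode in DLSS_SCALE:
    w, h = internal_resolution(3840, 2160, mode)
    ratio = (w * h) / (2560 * 1440)
    print(f"4K {mode}: ~{w}x{h} ({ratio:.2f}x the pixels of native 1440p)")
```

Under those assumed factors, 4K Balanced works out to roughly 2227x1253, which is below 2560x1440, while 4K Quality lands on 2560x1440.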

Many people who want a CPU like this will pair it with their RTX 4090. Do you think we will play at 1080p on our 4090s? We demand 4K tests so we can decide whether to skip this generation's AMD CPU and stick with our 7950X3D/7800X3D.

There's still a "myth" here.

The other elephant in the room is all the "professional reviewers" using RTX 4090s, mostly at 1080p, in those reviews. Your article touched on some of the misconceptions, but the most popular GPU on the Steam hardware list today is an RTX 3060/4060. Point being, it's rather impractical for consumers to purchase a $1,500-$2,400 GPU to expound upon CPU gaming "performance". But sure, the bar graphs might look better.

So run these same tests with the 9800X3D and a far more common RTX 3060/4060 at 1440p or 4K. Suddenly the AMD X3D "best CPUs for gaming" advantage becomes margin of error. But again, the former sells clicks.

Add one more thing: the multi-CCD X3D CPUs like the X900 and X950 X3D CPUs. Now you need specific BIOS settings, thread-directing chipset drivers, Microsoft Game Bar software, and a service to make "core parking" happen correctly. That's a fragile means to get the "best" performance in gaming. X3D multicore CPUs for mostly gaming above 1080p are an expensive, niche proposition.

PC Magazine - Best CPUs for gaming.

Achaios

Posts: 582 +1,503

There is no question here: Steve and TECHSPOT are in the right.

In the opposite corner, we have a bunch of Intel shills.

During the heyday of Intel CPUs (ca. 2014, Haswell), these very same people would passionately argue for testing CPUs at 720p to show off the prowess of gaming beasts such as the 4790K. Today the very same people argue for the opposite: testing at 4K.

Money talks and uh well you know what walks.

*This comes from someone who still owns a Haswell CPU and a 1080.

Proper 4k test should be included.

If I look at the TechSpot test, it makes it look like upgrading my 5800X3D will give me roughly 30 fps more in games.

If I look at TechPowerUp, which includes 4K testing in its reviews, I see that in most games at 4K I will only gain roughly 3 fps. I think the difference between the 9800X3D and 5800X3D was only 8 fps on average over all the games they use for testing.

So if I were one of those people who rely on just one site, it would seem worthwhile to spend $500 on a system upgrade.

Meanwhile, I would only gain a few fps for my outlay.

There were plenty of people who said that testing at 1080p doesn't really show realistic results when Zen and Zen+ were released. Just check the reviews of those.

I understand the reason to test at 1080p, but at the same time I'm aware that at higher resolutions, and with weaker GPUs, the difference between CPUs pretty much disappears in most games. Yet you often see people saying how much better the newest X3D CPU is going to be for someone playing at 1440p or 4K with a 4070, when in reality the CPU is barely going to make any difference.

While I didn't have a Zen or Zen+ CPU, if I had bought a new CPU during that time, I would certainly have gone with it over Intel, for the same reason I went with Alder Lake over the 5800X3D: I don't have a flagship GPU and I play at high resolutions, so I'd rather have better overall CPU performance than a small gaming performance improvement that likely won't be noticeable.

Also testing using DLSS Balanced mode, which is sub 1440p, with a 4090 and 9800X3D, while arguing it's more real-world than native is crazy to me.

The poll said most 4K gamers use DLSS Quality mode, while about the same amount play with DLSS Balanced as Native, but you for some reason didn't test the most used one?

And that's ignoring that very likely most of the users that answered the poll aren't using a 4090, particularly the ones using DLSS balanced.

A full native 4K test would've been much more informative. It would show that in some cases there are differences between CPUs, so people who care about specific games or genres could benefit from going with a faster CPU even at 4K, while in most cases the CPU will make no difference at all.

Average FPS is not what matters when considering a gaming CPU. Exactly zero people experience an average FPS of many games when they play a game.

It's the 1% lows in the specific game you're playing that most strongly affect gameplay. Look at those and see if those numbers are good enough for you. If not, then you need a better CPU. Averages are just a general indicator to get you looking at the right range of CPUs.
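To make the distinction concrete, here is a minimal sketch of how an average and a 1% low can diverge for the same run. It assumes one common definition of "1% low" (the frame rate implied by the mean of the slowest 1% of frames); reviewers and tools differ on the exact definition, and the frame times below are invented for illustration.

```python
def average_fps(frame_times_ms):
    """Average FPS over the run: frames rendered divided by total seconds."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """FPS implied by the mean of the slowest 1% of frames (at least one frame)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    return 1000.0 * len(worst) / sum(worst)


# Hypothetical capture: 198 smooth frames at 5 ms plus two 25 ms stutters.
sample = [5.0] * 198 + [25.0] * 2

print(f"average: {average_fps(sample):.1f} fps")
print(f"1% low:  {one_percent_low_fps(sample):.1f} fps")
```

In this made-up capture the average stays above 190 fps while the 1% low is 40 fps, which is the kind of gap an average alone hides.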

There is no myth.

When testing the best CPUs available for gaming, you don't gimp them with a 3/4060 or you will not get any relevant data for how fast it is. You use the best GPU available at low resolutions instead. Steve already addressed this at length in this review.

But the real issue here is:

How effing stupid do people think gamers are? Does anyone making the argument that a 9800X3D is useless for 3/4060 gaming think that someone who spent $2-300 on a GPU would consider a $500-600 CPU? Do you actually think a 3060 gamer is swayed to buying a 9800X3D by a glowing review? Anyone who makes this argument is basically saying that 3060 buyers are a pack of effing *****s.

I give the average gamer one hell of a lot more credit than that. They can very likely figure out that the 9800X3D is the very best gaming CPU out there. And then buy a 7600 or 12600 which is a better match to their system.

Frankly, at this point the only people who still argue against 1080p CPU testing are Intel shills who will change their tune the moment Intel produces a better gaming CPU. It is the only explanation for the willful misunderstanding and mischaracterisation of the facts presented. It is getting into flat earth territory, at some point you just need to walk away and let people keep their delusions, particularly when they aren't based in ignorance but ideology.

Thanx for the info, I didn't know that. The big numbers look better.

I still stand by what I typed, "proper 4k test should be included"

It would also mean fewer people asking on the forums whether they should upgrade their CPU for 4K gaming.

I genuinely still can't understand why people don't get the reason for testing like this.

Imagine a 16 lane drag strip with all the CPUs lined up for the race. 3. 2. 1. Go!

All CPUs are off and blitz down the track but there is a pace car out (game @ 4k) and it's preventing all the CPUs from passing because they're waiting on the pace car (GPU bound/bottleneck). The CPUs are jockeying back and forth but still held back by the pace car and essentially arrive at the finish line together.

Now, imagine the same race but without that pace car (game is now @ 1080p), meaning the CPUs are not held back waiting for the pace car but are free to rip down the track as fast as fluffing possible.

You've now efficiently tested the CPU and removed any constraints from it.

The end!
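The pace-car picture can be written down as a very rough model: the delivered frame rate is whichever is lower, the CPU's limit or the GPU's limit. This is a simplification (real games don't behave as a clean minimum), and every number below is hypothetical, chosen only to show the shape of the effect.

```python
def delivered_fps(cpu_limit_fps, gpu_limit_fps):
    """Simplified model: the slower of the two limits sets the frame rate."""
    return min(cpu_limit_fps, gpu_limit_fps)


# Hypothetical CPU limits (fps each CPU could reach with no GPU constraint).
cpu_limits = {"CPU A": 220, "CPU B": 170, "CPU C": 130}

# Hypothetical GPU limits for one game at two resolutions.
gpu_limits = {"1080p": 400, "4K": 90}

for resolution, gpu_fps in gpu_limits.items():
    results = {
        name: delivered_fps(cpu_fps, gpu_fps)
        for name, cpu_fps in cpu_limits.items()
    }
    print(resolution, results)
```

With these invented numbers, the three CPUs spread out at 1080p and all finish together at 4K, held to the GPU's pace.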

Take a look in the forums of popular PC sites and you will notice people recommending or buying CPUs like the 7800X3D and 9800X3D while using a 4070 Ti or lower at 1440p or 4K.

Sure someone getting a $500 CPU with a $300 GPU isn't that likely, but plenty of people might think that when they're getting a $700+ GPU, that super amazing CPU every reviewer is calling the best gaming CPU ever is going to be worth it, even though they would get significantly better performance in most cases by going with a $250 CPU and upgrading the GPU to the next tier.

Reviews should also show workloads that don't benefit from the greatest CPU, not only the workloads where the difference is noticeable. It's important for people looking into upgrading to know whether the new CPU they are looking at is going to bring actual, noticeable improvements to their experience and specific workloads, not only whether CPUs are faster in a possible future where they upgrade their GPU to the next-gen flagship and/or play at a low resolution.

PandaBacon

Posts: 10 +6

"Is the Ryzen 9800X3D Truly Faster for Real-World 4K Gaming?"

You need to change your title. You say 4k gaming but you enable DLSS so the game is being rendered at less than 1440p NOT 4k.

That means there aren't any 4k results in your review.

The video/article is for those who polled that they play 4k games upscaled. Not the 9% who said they play native. Can't please everyone.

Toliandar

Posts: 6 +4

I think both 1080p and 2160p benchmarks are educational. You need both to understand a) the cpu and b) what the cpu can do for your game or similar games, at different quality settings.

I am primarily a WoW player, and also use a 4K monitor at 120Hz. Therefore, I look to the ACC results as the best indicator of what the CPU can offer me. Homeworld 3 also has results that are reasonable to apply to WoW. Still, WoW has its own unique challenges and I think it would be interesting if they were investigated. Alas, this would take cooperation between the reviewer and large group of players. For mythic raid testing, that cooperation from the public has further challenges that I don't expect to be overcome.

So a spectrum of results for games that are reasonably applicable is appreciated.

brucek

Posts: 1,947 +3,074

Doing the research at 1080p is fine. Publishing articles that leave non-sophisticated readers with the impression that purchasing a shiny new CPU will have drastic impact on their gaming experience is not (when it won't).

Most of the tech reviewers who do the 1080p testing will say all the appropriate disclaimers if you happen to catch the right place in the right article or video. Not all publications will ensure that message has much chance of getting through though. For example, failing to balance an article done mostly at 1080p with even one single chart depicting the more muted differences in other use cases could strike some as deceptive.

It's really remarkable the lengths Steve is going to, to skate around and pigeonhole the argument into something that it isn't, just to avoid acknowledging what it actually is. I have to assume it's because acknowledging the argument as it actually is will unavoidably give legitimacy to it - and then he'd feel embarrassed.

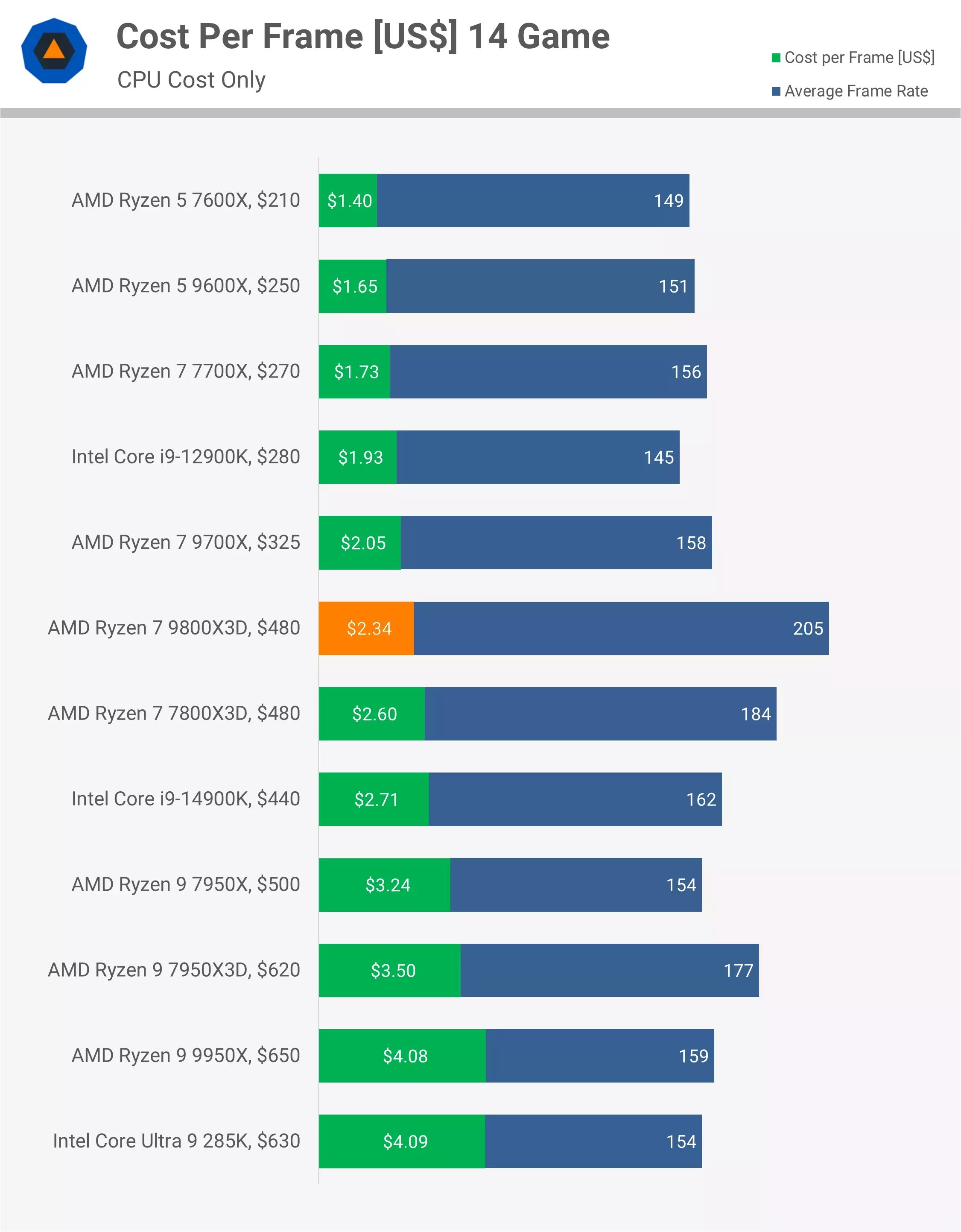

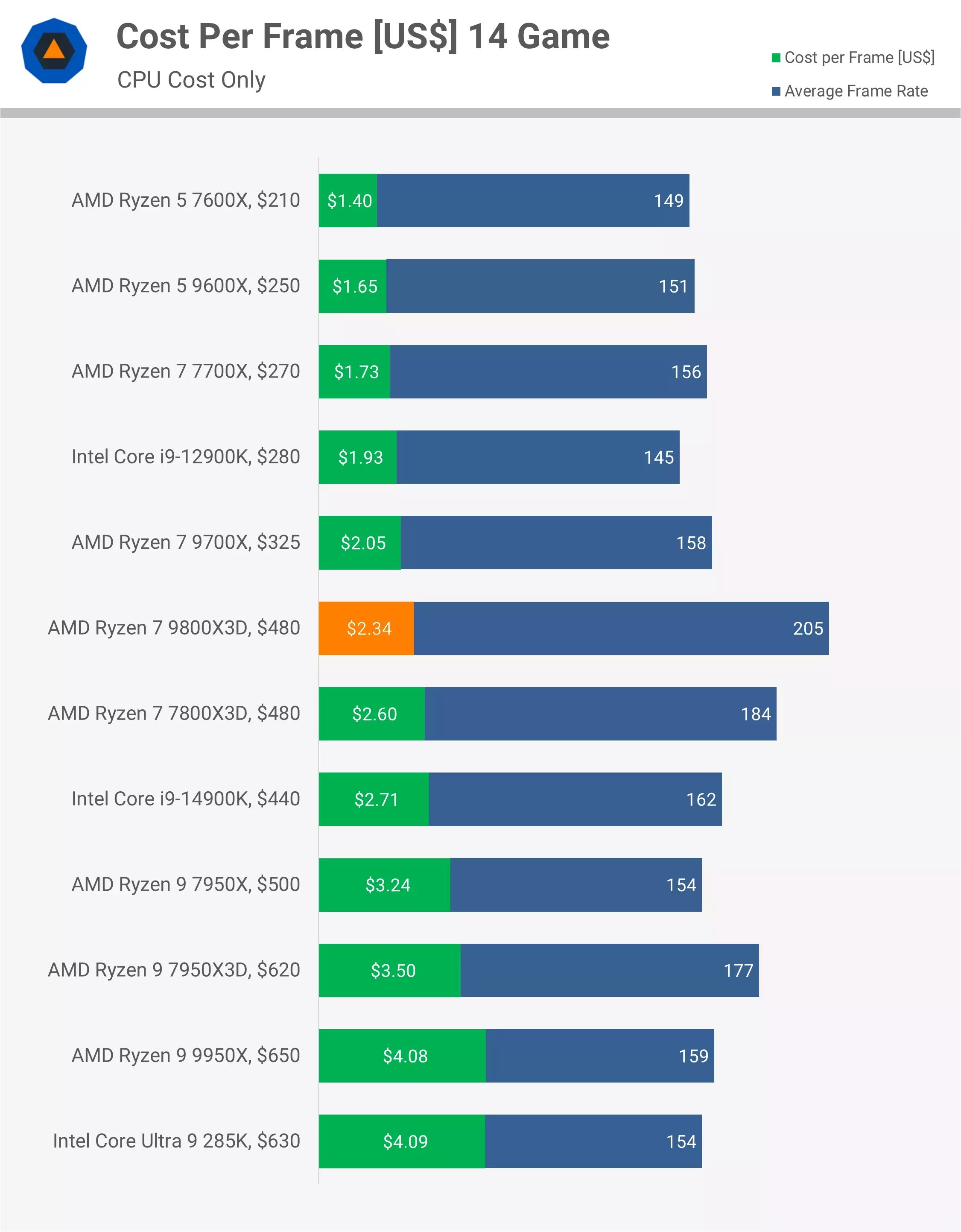

By the way, I'm not dead-set on seeing HU do higher-res testing. But the repeated misrepresentation of the argument they're trying to fight makes it worth calling out and addressing in detail.

To start with, in Steve's resolution/setting surveys, more than twice as many people voted that they game in 4K at Quality settings than at Balanced and Performance combined, yet he chose to show 4K Balanced benchmarks. Why? Or why not show native 1440p benchmarks, since that was overall the most popular demographic?

Here are some of the mischaracterizations of the topic he put into his article, which I feel uses far more words than needed precisely because skating around the argument takes more words than simply acknowledging it:

"The problem with this is, we don't know what games you're playing right now or the games you'll be playing in the future."

Just as you don't need to know that in order to show low-res, CPU-unrestrained benchmarks, you likewise don't need to know it to show where the GPU limit kicks in at higher resolutions. If you show where the GPU limiting kicks in at the same max settings you used for your low-res testing in a few games, on the same hardware, people can relate that performance to their systems and pretty easily infer what performance they'll experience from the CPU at that higher resolution and the settings they like to play at. In fact, if you customized the settings to be different than in your low-res testing, it would fail to serve the purpose of gauging where and how much GPU-limiting kicks in, because evaluating the drop-off trajectory to infer how it relates to your hardware depends on the hardware remaining the same at each level of testing.

So, that's a non-excuse.

"We also don't know what settings you'll be using, as this depends largely on the minimum frame rate you prefer for gaming."

You don't need to know that, either. The same thing applies: you just need to show where the GPU limiting kicks in, and how severely at max settings in a few games, and then gamers will be able to reasonably infer how much they'll be impacted with their system and at their preferred settings. So, that's another non-excuse.

"As mentioned earlier, we recognize that most of you understand this, though a vocal minority may continue to resist low-resolution testing."

This is a strawman argument that disingenuously frames the matter as 'one or the other'. People aren't against low-resolution testing. They're against not having supplementary higher-res testing that communicates how much of the CPU's low-res performance spread vanishes when switching to the same settings at higher resolutions. They want that information so they know if it's a good idea to upgrade their CPU.

"Low-resolution testing reveals the CPU's true performance, helping you make informed "cost per frame" comparisons."

It actually doesn't do that - which is one of the big points. How can people make an informed purchasing decision based on cost-per-frame data that they never saw? The 1080p FPS spreads don't exist at 1440p or 4k (and won't for many years for perhaps the overwhelming majority of purchasers of the latest CPU). So, the cost-per-frame data shown is irrelevant for the overwhelming majority of potential purchasers.

You don't have to show higher-resolution cost-per-frame data to get the point across. You just need to show the drop-off in FPS spreads between tested CPUs at higher resolutions, and from that data people will know the cost-per-frame has changed, and can calculate the difference at the tested resolution, and infer a difference based on how their hardware compares to the test-bed hardware.
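As a rough illustration of how the cost-per-frame picture shifts once the FPS spread narrows, the sketch below computes the extra dollars paid per extra frame for an upgrade. The prices and frame rates are hypothetical and invented for this example; they are not measurements from the review or from any other site.

```python
def extra_cost_per_extra_frame(base, upgrade, fps_key):
    """Dollars paid per additional frame when picking `upgrade` over `base`."""
    extra_frames = upgrade[fps_key] - base[fps_key]
    if extra_frames <= 0:
        return float("inf")  # no frame-rate gain at any price
    return (upgrade["price"] - base["price"]) / extra_frames


# Hypothetical prices and frame rates, invented for illustration only.
cheaper = {"price": 250, "fps_1080p": 150, "fps_4k": 88}
flagship = {"price": 500, "fps_1080p": 200, "fps_4k": 90}

for fps_key in ("fps_1080p", "fps_4k"):
    dollars = extra_cost_per_extra_frame(cheaper, flagship, fps_key)
    print(f"{fps_key}: ${dollars:.2f} per extra frame")
```

With these made-up inputs, the same $250 premium buys 50 extra frames at 1080p but only 2 at 4K, so the cost of each extra frame changes by a large factor even though the CPUs and prices are identical.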

"For those who still disagree, have you considered why every credible source and trusted industry expert relies heavily on low-resolution gaming benchmarks when evaluating CPU gaming performance? There isn't a single respected source that doesn't."

This is an appeal-to-authority fallacy that uses a strawman to mischaracterize the argument he's fighting. People aren't saying low-res testing shouldn't be done to evaluate the CPU's unbound performance. It absolutely should be done. They want higher-res data for an entirely different reason: to know whether upgrading is worthwhile for them. It's a matter of academic data versus practical data. A CPU review that aims to inform prospective buyers of a CPU should ideally have both.

This has been pointed out to Steve ad nauseam, so I have little doubt that he knows he's being dishonest.

"We stopped including those tests after initially caving to demand, realizing they can be misleading and offer little value."

What's misleading is showing only 1080p benchmark results, being excited about those results in your article or video, which hypes up your viewers for the CPU, showing price-to-performance graphs, and not mentioning at all the massive caveat that likely all of the gaming performance advantage will vanish when running the CPU at higher, typical resolutions.

And in the realm of little value, it's hard to beat showing data that doesn't address the inquiry in prospective purchasers' minds, and which is the reason why they're reading or watching your review.

Honestly, this is a farce at this point. Steve just doesn't want to acknowledge the actual argument and interest, and he's saying any silliness just to dance around it.

brucek

Posts: 1,947 +3,074

"In the opposite corner, we have a bunch of Intel shills."

I don't see a bunch of people complaining that the wrong CPU or brand was handed the crown. To me this discussion is mostly about the lack of additional information around "and how much of that potential difference could I expect to realize for my actual use case?"

"How effing stupid do people think gamers are?"

I must be pretty effing stupid because I have no idea how to extrapolate from 1080p/4090/low results to 1440p/3080/high going just on paper. Is there a formula for that? I don't believe it's as simple as "none because you're entirely GPU limited" nor as dramatic as the single-case charts might suggest. Where exactly in between is not an equation I can solve for myself.

Also, I feel important reviews like this get read not only by regulars who follow all this as a hobby, but also by the drop-in crowd who are here every few years when it's time to buy. They're not stupid, but they could use the context and background that regulars might take for granted.

m3tavision

Posts: 1,584 +1,319

Essentially, IF YOU ARE A GAMER then it is worth the added $$ to make sure you grab an X3D version of the chip.

To illustrate this properly, just re-order the following chart in its proper order of frames first... to see the entire CPU industry in one metric. And it's pretty profound!

There is a reason these^ are not in order of frames, it would make buyers too aware.
