The big picture: Rising power consumption from generation to generation has drawn concern regarding the future of dedicated desktop graphics cards. The issue has arisen yet again as more rumors surrounding Nvidia's upcoming Blackwell lineup emerge. However, similar rumors about the Ada Lovelace cards proved to be unfounded.

A prominent leaker claims Nvidia's upcoming RTX 5000 series graphics cards will consume significantly more power than their predecessors. The rumors might not represent final TDPs, so readers should take the information with a grain of salt.

According to @kopite7kimi, the higher-end Blackwell GPUs will see the largest wattage increases. The flagship GeForce RTX 5090 could draw 600W, a roughly 33 percent jump over the 4090's 450W. Meanwhile, the 5080 might rise to 400W, up 25 percent from the 4080's 320W.

– kopite7kimi (@kopite7kimi) September 3, 2024

However, Kopite shared similar rumors about the RTX 4000 cards two years ago, which eventually proved untrue. In the months before the Ada Lovelace generation's launch, some feared that its top GPU would consume 600W or even 800W.

The rumored RTX 4000 TDPs gradually dropped throughout 2022, and Nvidia ultimately launched the lineup with modest wattage changes compared to RTX 3000. The RTX 4090 consumes the same amount of power as the 3090 Ti, the 4080's TDP is identical to the 3080's, and the 4070 draws slightly less than the 3070.

Recent rumors surrounding RTX 5000 TDPs might represent Total Board Power or maximum power draw, which can differ from the wattages that most users typically experience. Furthermore, Nvidia might not have finalized the TDPs for Blackwell yet.

Projected release dates for RTX 5000 have shifted back and forth over the last few months, but the latest information suggests that the cards might debut at CES 2025 in January. AMD's upcoming RDNA4 series and Intel's Arc Battlemage lineup could appear around the same time or in late 2024.

Blackwell's flagship RTX 5090 is expected to be around 70 percent faster than the 4090, with 28 GB of VRAM and a 448-bit memory bus. Meanwhile, the 5080 could outperform the 4090 by roughly 10 percent with 16 GB of VRAM, and it might launch before the 5090. All RTX 5000 GPUs except the mainstream desktop RTX 5060 are expected to feature GDDR7 memory. The series is reportedly built on TSMC's 4N process, a 5nm-class EUV node.

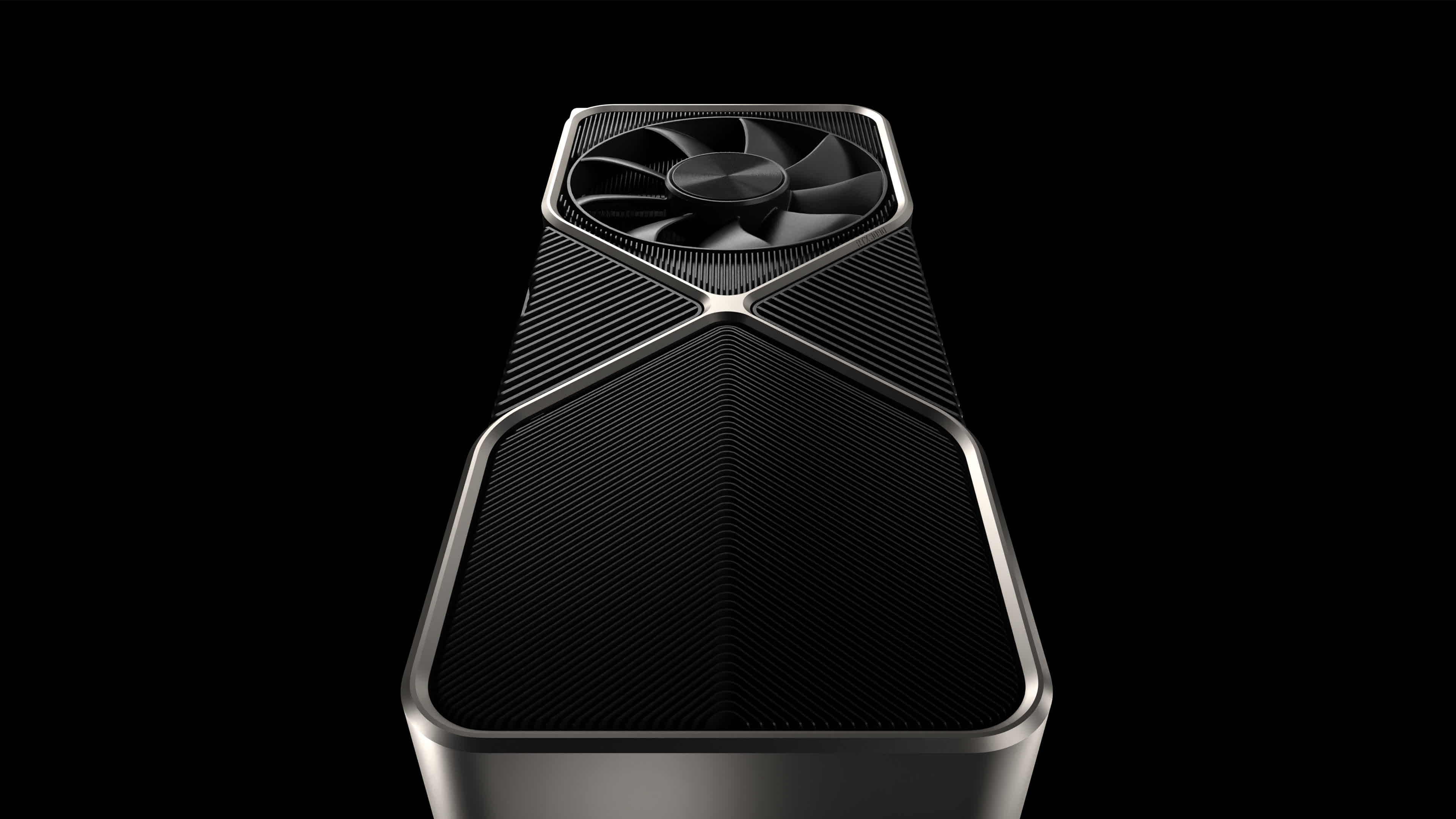

Nvidia's RTX 5090 might draw 600W, other Blackwell GPUs could also increase wattage