All I see here is some "hurr you don't actually know how it works" nonsense with zero substance to back it up. Nobody will believe you if you claim to be experienced with something ("you clearly don't have experience with game engines, I do") but then don't actually bother explaining the things you're talking about.

Yes, that statement is absolutely correct. VRAM usage is 90% textures. It has nothing whatsoever to do with allocation, 90% of the VRAM that is used (not allocated) is textures. Textures are the only significantly large assets that are put in VRAM. 3D models (which are just vectors) are much smaller. Framebuffers (which are bitmaps) can be numerous, but are also smaller, on the order of a few MB each (a 32-bit 1080p framebuffer is just over 8 MB, a 1440p one is just under 15 MB); you'll maybe have a couple hundred MB worth of framebuffers per game. Shader code is tiny (on the order of KB) and easily fits in small GPU caches. The only other item that can use a lot of space in VRAM is BVH structures for ray tracing. But if you're not using ray tracing, the overwhelming majority of your VRAM usage will be just textures.
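For anyone who wants to check the framebuffer numbers, here is a quick sketch of the arithmetic. It assumes a plain uncompressed buffer at 4 bytes per pixel with no padding or alignment overhead; the function name and the resolution table are just illustrative, not taken from any engine.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int = 32) -> int:
    """Size in bytes of one uncompressed framebuffer (assumes no padding)."""
    return width * height * bits_per_pixel // 8


# Common resolutions (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
}

for name, (w, h) in RESOLUTIONS.items():
    size = framebuffer_bytes(w, h)
    # Report in decimal megabytes (1 MB = 1,000,000 bytes).
    print(f"{name}: {size:,} bytes = {size / 1e6:.2f} MB")
```

That gives 8,294,400 bytes for 1080p and 14,745,600 bytes for 1440p, matching the "just over 8 MB" and "just under 15 MB" figures. If you read "a couple hundred MB" as about 200 MB, that is room for roughly 24 such 1080p buffers.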

Wrong. Most games are made for consoles. And both the PS5 and the Series X have around 10 GB of memory to use as VRAM (on the Series X it's 10 GB due to the asymmetric memory setup, where the last 6 GB are slower; on the PS5 it's more flexible and can go over 10 GB depending on how little CPU RAM the game needs).

Smart devs will give the PC version an option to degrade texture quality so those games will run well on 8 GB cards. But not every dev is a smart dev.

Absolutely incorrect. Steve himself, right here on TechSpot and on Hardware Unboxed, has done multiple videos addressing exactly this nonsense claim that "games don't need more than 8 GB".

Here is Steve comparing the RTX 3070 and RX 6800 three years after their launch, and showing how the RTX 3070 struggles in those games, even at 1080p, specifically because of its 8 GB of VRAM, while the RX 6800 with 16 GB doesn't.

Here is a follow-up to that video where he compares the RTX 3070 to a Quadro-class RTX A4000 based on the same Ampere chip but with 16 GB instead, again showing the 3070 struggles specifically because it has only 8 GB.

Here is Daniel Owen comparing the 8 GB and 16 GB versions of the RTX 4060 Ti and arriving at the same conclusion, the 8 GB card struggles in modern games while the 16 GB version doesn't.

Again, those are games running at 1080p in the videos, not "4K with the most extreme settings". And you can see that, depending on the game, that VRAM limitation can take either the form of degraded performance (lower averages and much lower 1% lows), degraded visual quality (games like Hogwarts Legacy, Forspoken and Halo Infinite simply unload textures when you run out of VRAM and you get a blurry mess on the screen), or both.

Turns out the "basic knowledge that most people don't understand" is actually something you didn't understand.

You then proceeded to go on an AMD tirade that has nothing whatsoever to do with the VRAM issue at hand.

There is no issue really. You mentioned a few games that still run well on 8GB after patches. Just like some people claim that The Last of Us (an AMD-sponsored console port, to be exact) doesn't run on 8GB GPUs; it runs just fine after the first patches came out.

Also, PC games are optimized for PC obviously.

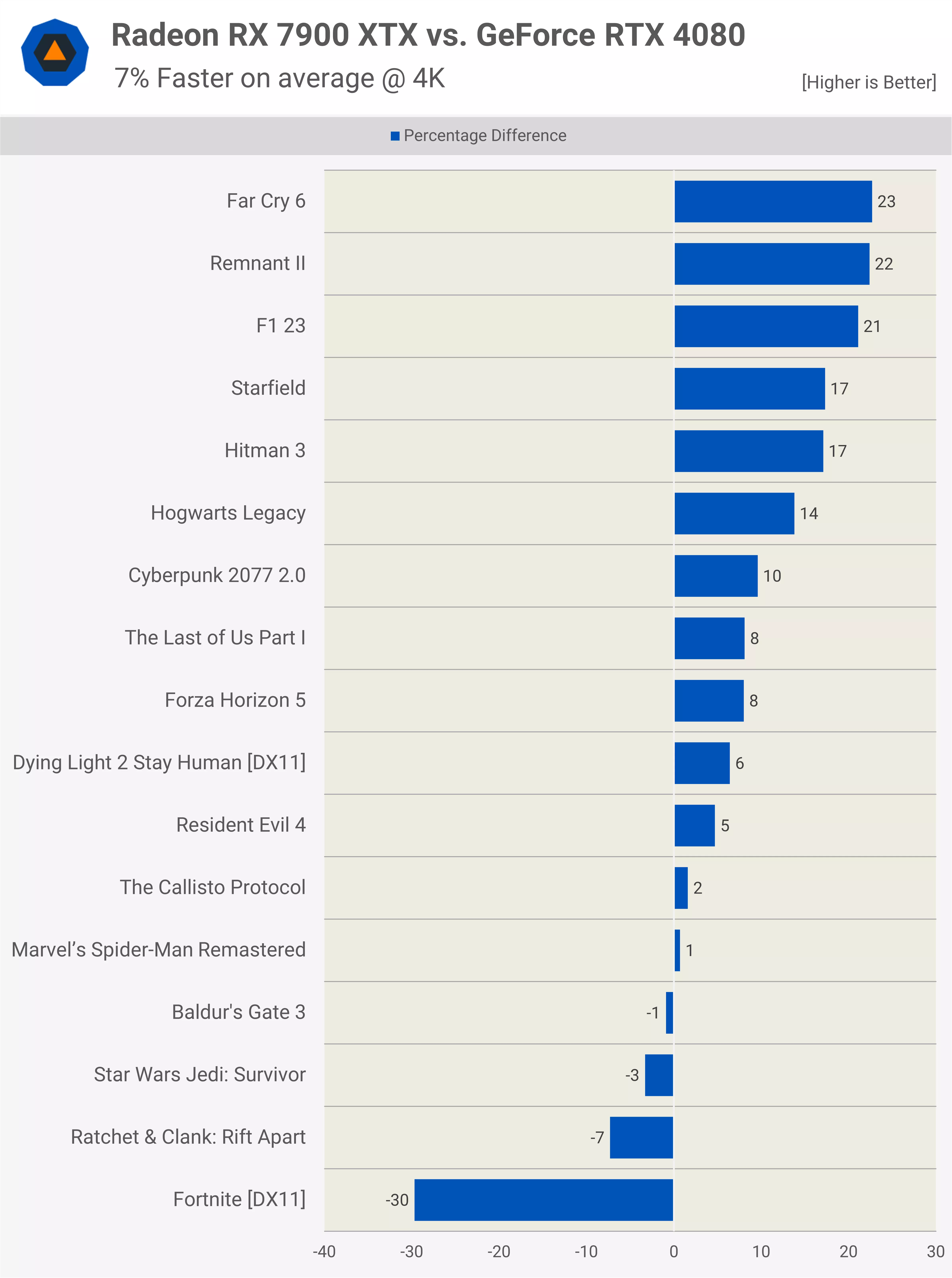

Even games developed for console / AMD in the first place tend to run better on Nvidia anyway:

The long-awaited God of War Ragnarök is now available on PC, bringing the latest chapter of the epic action-adventure series to a whole new audience. The PC version offers enhanced visuals, featuring support for cutting-edge technologies like DLSS, FSR, and Frame Generation. In our performance...

www.techpowerup.com

7900XTX and its 24GB is closer to 4070 series than 4090/4080 series.

You can ramble all day long about VRAM and how important it is, Nvidia has 90% market share and is still gaining. Obviously AMD's approach of offering more VRAM while focusing on pure raster performance did not work. Nvidia wins with ease in 90% of new games coming out.

Silent Hill 2 Remake lets you relive the iconic classic with modern graphics thanks to Unreal Engine 5. The PC version offers enhanced visuals, featuring support for cutting-edge technologies like DLSS, FSR and ray tracing. In our performance review, we'll look at the game's graphics quality...

www.techpowerup.com

16GB last-gen Radeon 6800/6900 series with the same performance as last-gen Nvidia 8GB offerings doesn't impress anybody.

And yeah, 3060 Ti 8GB destroys 6700XT and 3060, both with 12GB. VRAM doesn't help when the GPU is weak, like I said.

3070 8GB even beats 6900XT 16GB in 1440p maxed out INCLUDING MINIMUM FPS. That is just sad and the reason why AMD GPUs are not selling well. Not to mention that DLSS/DLAA beats FSR every single time.

4060 Ti 8GB is generally a crappy card, yet performs the same as 7800XT and 6900XT here. Enable DLSS/FSR and the 4060 Ti would be the superior option in terms of visuals.

AMD makes good CPUs but their GPUs need work. Not just GPUs, software as well. Feature-wise AMD is years behind Nvidia. AMD's copy/paste methods did not work well.

AMD left the high-end GPU market for a reason. Let's see if Radeon 8000 will change anything. It will only if AMD improves FSR and brings vastly superior performance per dollar, oh, and improves RT performance a lot, because AMD GPUs take a big performance hit in too many new games due to lacking RT perf.

Most popular game of the year:

Black Myth Wukong has over two million gamers playing the game. This souls-like action RPG is a huge hit, especially in China. Powered by Unreal Engine 5, the graphics look great, but hardware requirements are definitely not on the light side, we had to use upscaling. In our performance review...

www.techpowerup.com

AMD is lacking perf once again.

You look at VRAM, I look at performance in new games and Nvidia is the clear winner.

Not that I care much, I have a 4090, but I did not buy this card because of the 24GB VRAM. I bought it because of the GPU power and feature set.

The VRAM present on a card like 7900XTX is a waste, since the GPU can't do RT or Path Tracing anyway, and this is where you actually need a lot of VRAM.

Not a single game uses a lot of VRAM in rasterization only, which AMD GPU owners seem to praise.

VRAM is only good if you actually need it. If you don't need to run games on highest settings + RT in native 4K/UHD, you simply don't need more than 12GB really, maybe 16GB in rare edge cases at 3440x1440 or so. At regular 1440p and max settings, 12GB is more than plenty, as you can see in the 3 links I provided, which are some of the most popular AAA PC games right now and brand new.

www.windowscentral.com